How to Crop a Picture in Microsoft Word

- How to Crop a Picture in Microsoft Word

- How to Automatically Turn off a Mac Keyboard’s Backlight After Inactivity

- GeForce RTX 3080 vs. Radeon RX 6800 XT

- How to Make Chrome Always Open Your Previously Open Tabs

- What Is an “Uncertified” Android Device?

How to Crop a Picture in Microsoft Word

Posted: 03 Feb 2021 08:23 AM PST

You can remove unnecessary pixels from an image directly in Microsoft Word using the built-in cropping tool. You can also crop a photo to fit a specific shape. Here's how to crop a picture in Microsoft Word.

How to Crop a Picture in Word

To crop a picture in Microsoft Word, open the Word document, add an image (Insert > Pictures), and then select the photo by clicking it.

Next, go to the "Picture Format" tab, which appears after you select the image. Then, in the "Size" group, click "Crop."

In the drop-down menu that appears, select "Crop."

Cropping handles will now appear around the frame of the image. To crop out certain areas, click and drag the handles to capture only the content that you want to keep.

After setting the cropping frame, click the icon in the upper half of the "Crop" option in the "Size" group of the "Picture Format" tab.

The unwanted areas of your image are now removed.

How to Crop a Picture as a Shape in Word

To crop an image as a shape, open the Microsoft Word application, insert an image (Insert > Pictures), and then select the image by clicking it.

In the "Picture Format" tab, which appears after selecting the image, click the "Crop" button found in the "Size" group. In the drop-down menu that appears, select "Crop To Shape."

A sub-menu displaying a large library of shapes will appear. Select the shape that you'd like to crop the image as by clicking it. We'll use the teardrop shape in this example.

Your image will now be cropped as the selected shape automatically.

This is just one of many photo editing tools available in Microsoft Word. You can also do things such as removing the background from an image, annotating an image, and more.

RELATED: How to Remove the Background from a Picture in Microsoft Word

How to Automatically Turn off a Mac Keyboard’s Backlight After Inactivity

Posted: 03 Feb 2021 07:10 AM PST

The keyboard backlight feature on Apple MacBooks is useful when you're working late nights or in dark rooms. But it's easy to forget about, and it can drain your battery. Here's how to automatically disable the Mac keyboard backlight after inactivity.

You can set up a feature from the System Preferences menu on your Mac that will automatically disable the keyboard backlight after you have stopped using your computer for a set period of time.

To get started, click the Apple icon found in the top-left corner of the menu bar. From there, choose the "System Preferences" option.

Now, go to the "Keyboard" section.

From the "Keyboard" tab, check the box next to the "Turn Keyboard Backlight Off After 5 Secs of Inactivity" option.

Click the "5 Secs" dropdown to increase the time limit to up to five minutes.

And that's it. The next time you step away from your MacBook, the illuminated keyboard won't sip away at your computer's built-in battery.

Just switched from Windows and wondering where your Mac's Control Panel is? Well, it's called System Preferences, and here's how it works.

RELATED: Where Is the Control Panel on a Mac?

GeForce RTX 3080 vs. Radeon RX 6800 XT

Posted: 02 Feb 2021 11:14 AM PST

Due to popular demand, we’re following up on our comparison of the RTX 3070 and RX 6800 from a few weeks back. This latest GPU shootout sees the faster and more expensive GeForce RTX 3080 pitted against the Radeon RX 6800 XT. We have a long list of games to test, covering the 1080p, 1440p and 4K resolutions.

But before we get to that, a quick disclaimer: yes, we know availability for both graphics cards is terrible, especially the Radeon, which is almost non-existent based on what we're hearing from retailers. The RTX Ampere GPUs are coming in slowly, but supply is nowhere near where it needs to be, so shortages will continue for the foreseeable future. As we reported last week, scalpers have unfortunately been a major factor in the equation.

Still, if you’re patient enough, there's a chance you'll eventually get your hands on one of these GPUs, and of course we're hoping availability improves soon. Whenever that time comes, this review will help answer the question of which one you should buy.

Since we first tested the GPUs, we’ve updated our test rig, moving from the Ryzen 9 3950X to the newer 5950X, though we’re sticking with the DDR4-3200 CL14 dual-rank memory as it's quite a bit faster than even DDR4-3800 single-rank memory. We have also disabled features like Smart Access Memory unless specified otherwise.

Representing the GeForce team is the RTX 3080 Founders Edition graphics card, while the Radeon GPU is represented by AMD's own reference card. Both were tested at stock settings with no overclocking. As usual, of the 30 games we tested we’ve picked around a dozen of the most interesting results before we jump into breakdown graphs to summarize all the data.

Benchmarks

Starting with Battlefield V, we see that the Radeon GPUs have a significant performance advantage in this title, so much so that the RX 6800 is able to beat the RTX 3080 at 1080p and 1440p. At 1440p the 6800 XT is 20% faster than the RTX 3080, a massive performance difference considering they occupy a similar price point, or at least are meant to.

The Ampere architecture does scale better at 4K, or at least the higher core count parts like the RTX 3080 do. This isn't so much a memory bandwidth issue for the RDNA 2 GPUs as people often claim, rather it's a case of the RTX 3080 and its underlying architecture coming to life at this higher resolution. Whatever the reason, the fact remains that the RTX 3080 often performs better at 4K and here we're seeing it come from 17% behind the 6800 XT at 1440p to 6% faster. It’s not a big difference in this title, but the GeForce GPU shows it’s the better performer at 4K.
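The "20% faster" and "17% behind" figures quoted for Battlefield V at 1440p describe the same gap measured against different baselines. The short sketch below shows the arithmetic; the function names and the frame rates are hypothetical, chosen only so that one card is exactly 20% faster than the other, and are not measured results from this review.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster card A is than card B, as a percentage of B's frame rate."""
    return (fps_a / fps_b - 1.0) * 100.0


def percent_behind(fps_a: float, fps_b: float) -> float:
    """How far card B trails card A, as a percentage of A's frame rate."""
    return (1.0 - fps_b / fps_a) * 100.0


if __name__ == "__main__":
    # Hypothetical frame rates (assumption, not benchmark data):
    # card A is exactly 20% faster than card B.
    card_a_fps = 120.0
    card_b_fps = 100.0

    print(f"A is {percent_faster(card_a_fps, card_b_fps):.1f}% faster than B")
    print(f"B is {percent_behind(card_a_fps, card_b_fps):.1f}% behind A")
```

Because the two percentages use different denominators, a 20% lead for one card corresponds to a deficit of roughly 17% for the other, not 20%.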

Hitman 2 is mostly CPU limited at 1080p, so we can skip those results and move to 1440p. Here the RTX 3080 and 6800 XT are closely matched, with the GeForce GPU winning by a slim 6% margin. That margin grows only slightly at 4K, to 7%, making the RTX 3080 slightly faster in Hitman 2.

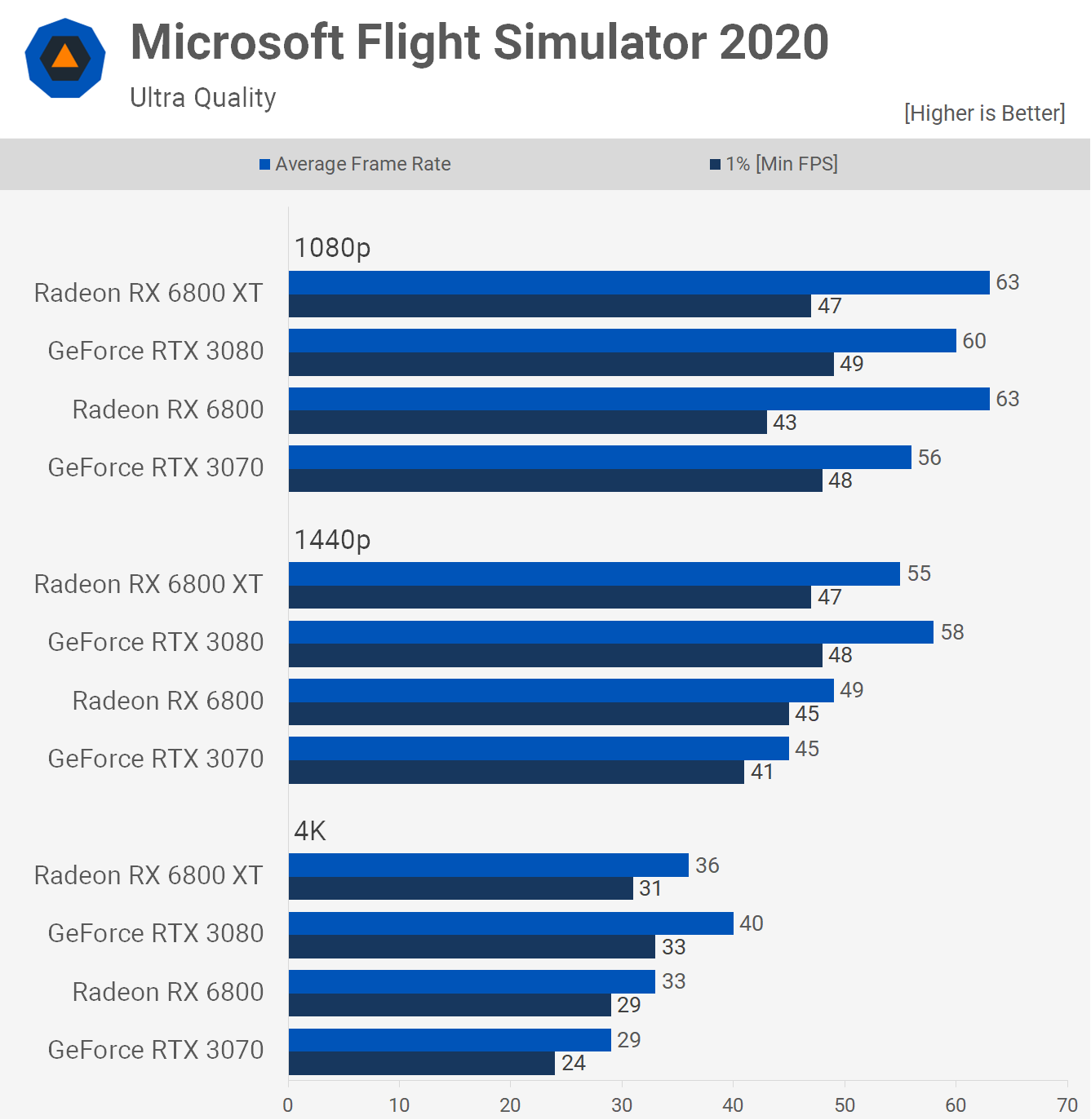

Microsoft Flight Simulator 2020 is also CPU limited at the lower resolutions, and pushing past 60 fps when flying over densely populated areas is very difficult. The 6800 XT is just 3 fps faster at 1080p on average. The RTX 3080 then pulls ahead at 1440p to win by a 5% margin, and by a more significant 11% at 4K, allowing the GeForce GPU to reach 40 fps, which does make the game noticeably smoother.

Next up we have Borderlands 3 and here the Radeon GPUs perform quite well. The 6800 XT is 7% faster than the 3080 at 1440p and 8% faster at 1080p, not massive margins by any means but a clear win for the 6800 XT. Once again though, as we reach 4K, the 3080 catches up and this time matches the 6800 XT with 66 fps.

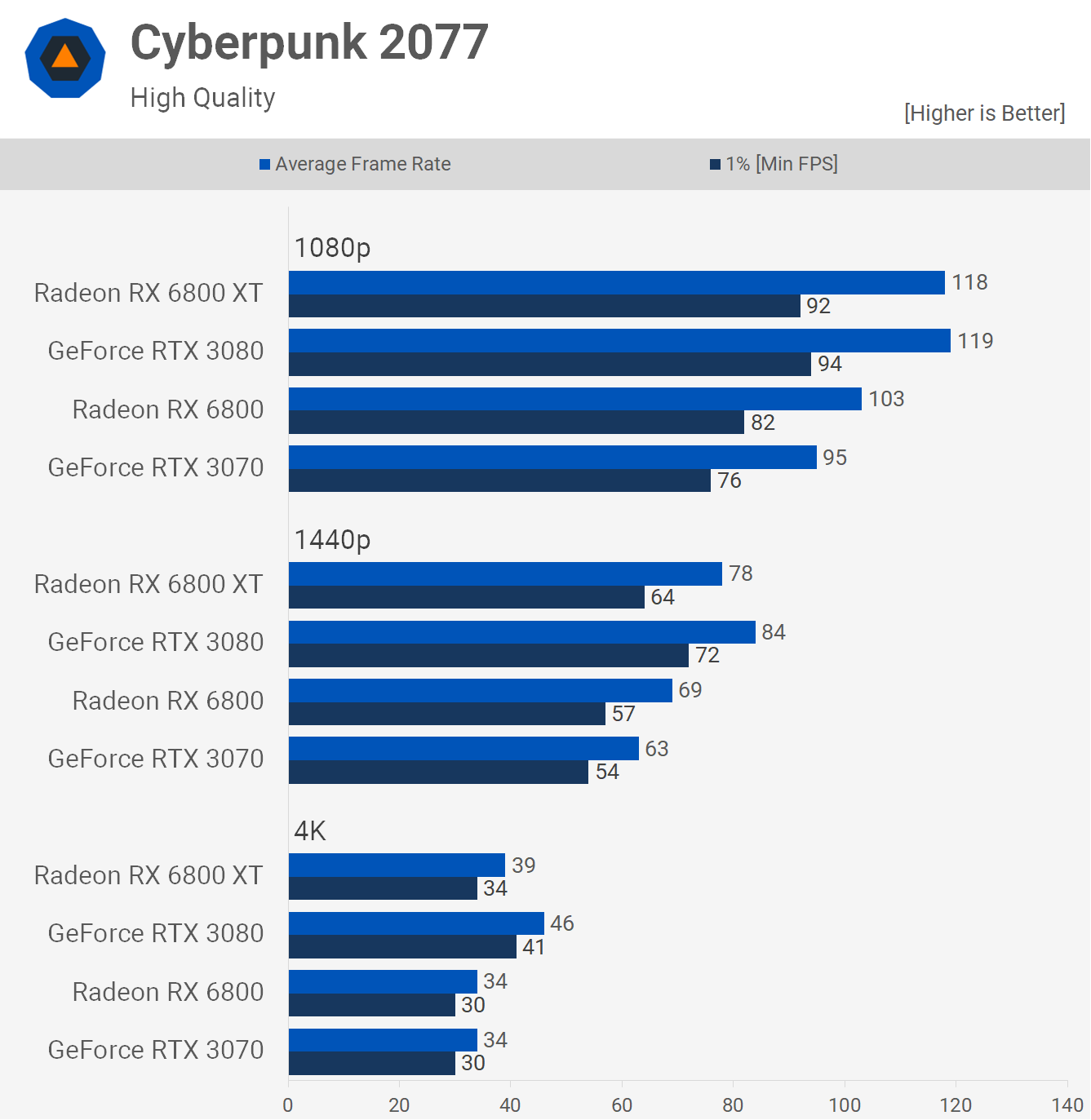

As we know from recent benchmarks focusing on Cyberpunk 2077 performance, the GeForce GPUs have a slight performance advantage in this title, all else being equal. Given this title was sponsored by Nvidia, the performance is acceptable overall on the Radeon side. For example, the RTX 3080 was 8% faster at 1440p, but a much more substantial 18% faster at 4K. Turn on DLSS (we have a dedicated article for that) and the GeForce delivers an entirely superior class of performance.

Control is another title where Nvidia was closely involved in optimizing ray tracing and DLSS. Without utilizing those features, the RTX 3080 is 13% faster at 1440p, which is a big performance increase, and as expected this margin only increased at 4K, blowing out to an 18% advantage in Nvidia's favor. DLSS 2.0 is a key factor for playing Control on an RTX GPU. For example, the RTX 3080 goes from a 60 fps average to 90 fps if you enable DLSS. Using both ray tracing effects and DLSS then brings you back down to about the same 60 fps average, but with better graphics, as this particular title has a solid implementation of ray tracing.

Red Dead Redemption 2 sees the GPUs matching each other’s performance at 1080p and 1440p. Then at 4K, the RTX 3080 is able to pull ahead, beating the 6800 XT by a convincing 15% margin.

The Outer Worlds was built using Unreal Engine 4 which typically favors Nvidia hardware, so it comes as little surprise to see the RTX 3080 pulling well ahead of the 6800 XT, even at 1080p. At 1440p the GeForce was 15% faster and that margin blows out to a whopping 21% at 4K giving Nvidia a significant performance advantage in this game.

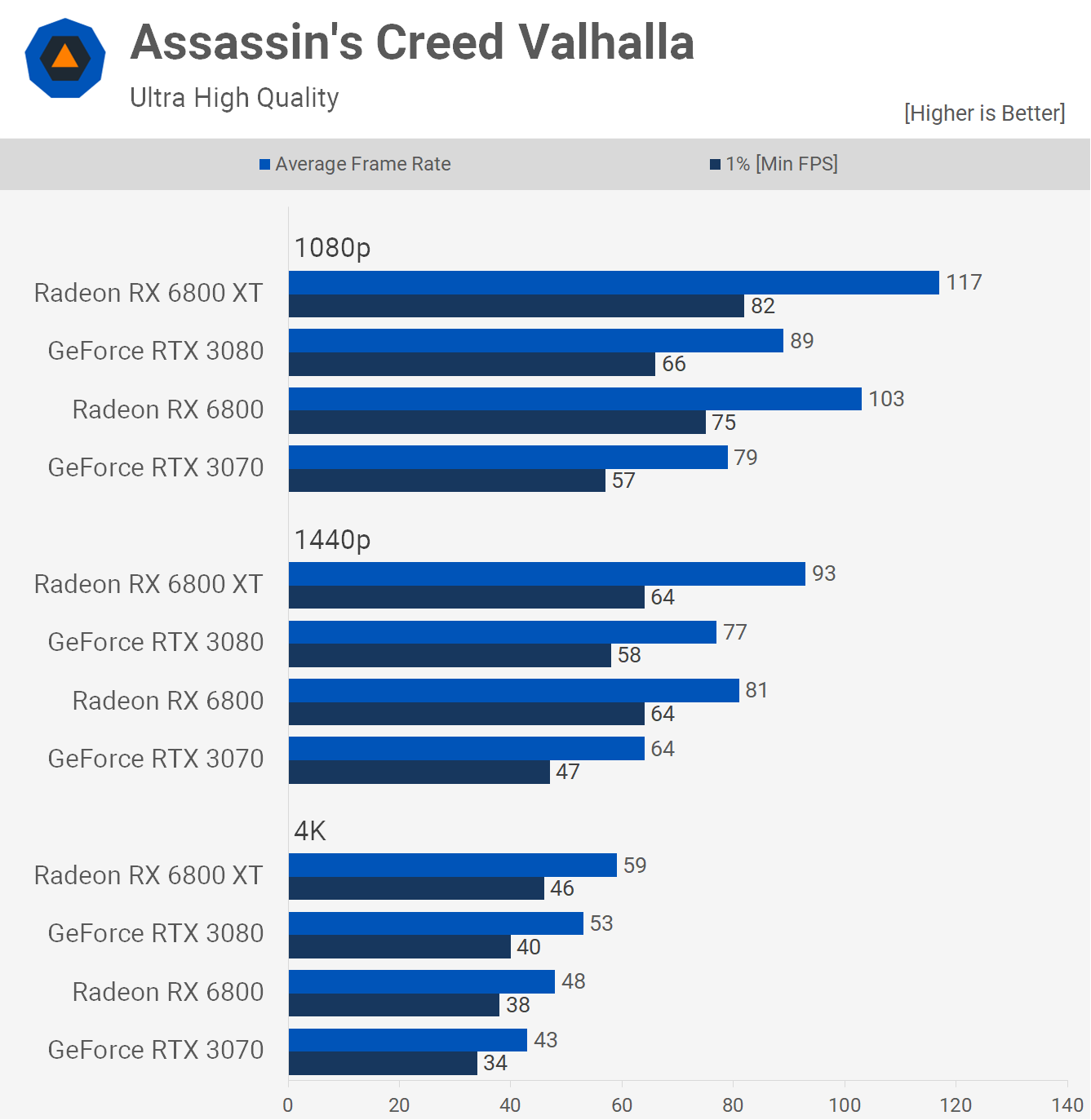

There are plenty of new AMD sponsored games as well. Such is the case of Assassin's Creed Valhalla. AMD's investment has paid off as the 6800 XT is a full 31% faster at 1080p, 21% faster at 1440p and 11% faster at 4K.

F1 2020 is an Nvidia Gameworks title but it's always run well on AMD hardware. Here we can see the 6800 XT offers slightly more frames at 1080p and 1440p, though it’s a mere 6% advantage at 1440p. At 4K results are more evenly matched.

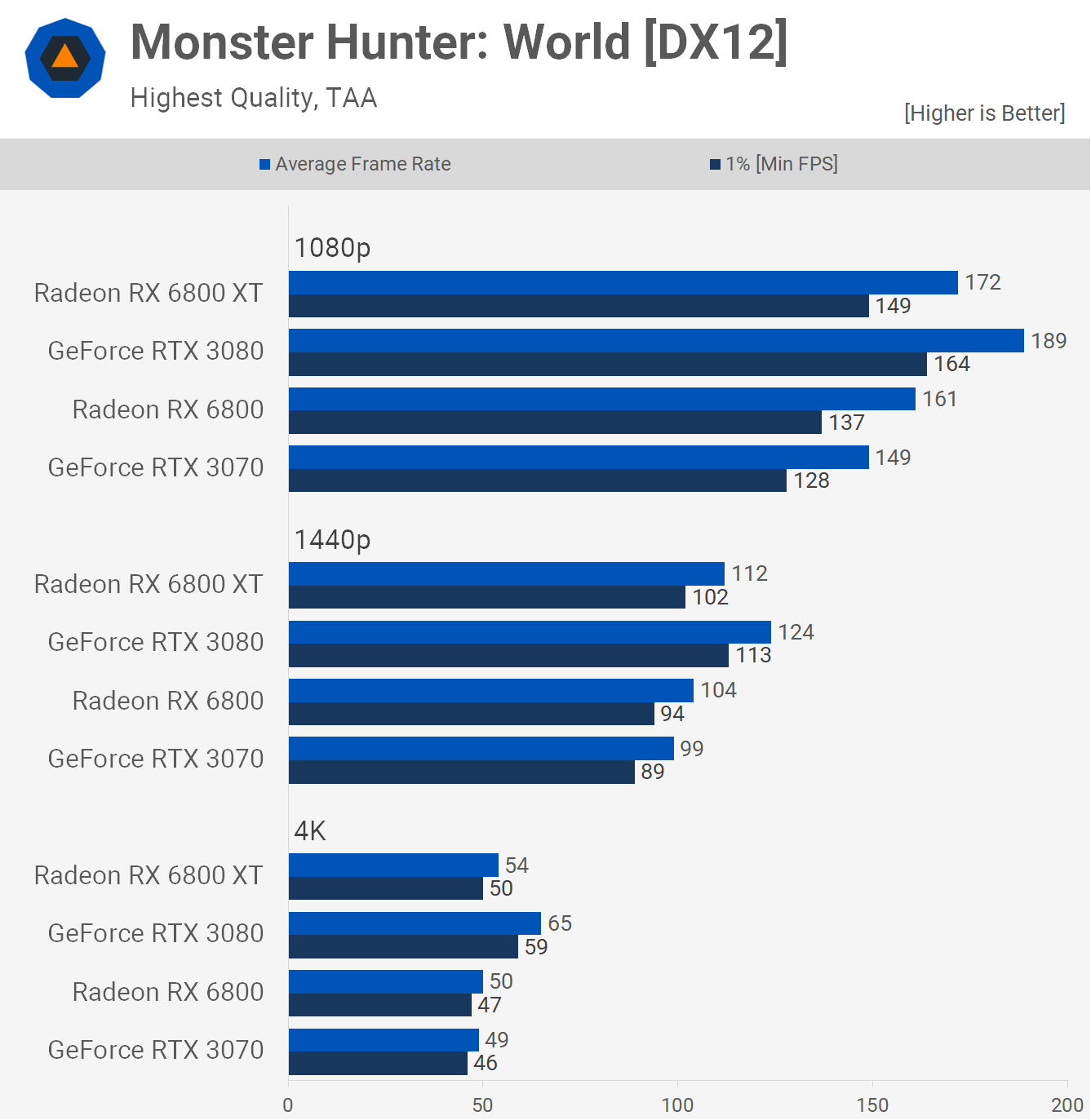

Monster Hunter World sees the RTX 3080 performing exceptionally well. Even at 1080p it was 10% faster than the 6800 XT, 11% faster at 1440p and 20% faster at 4K.

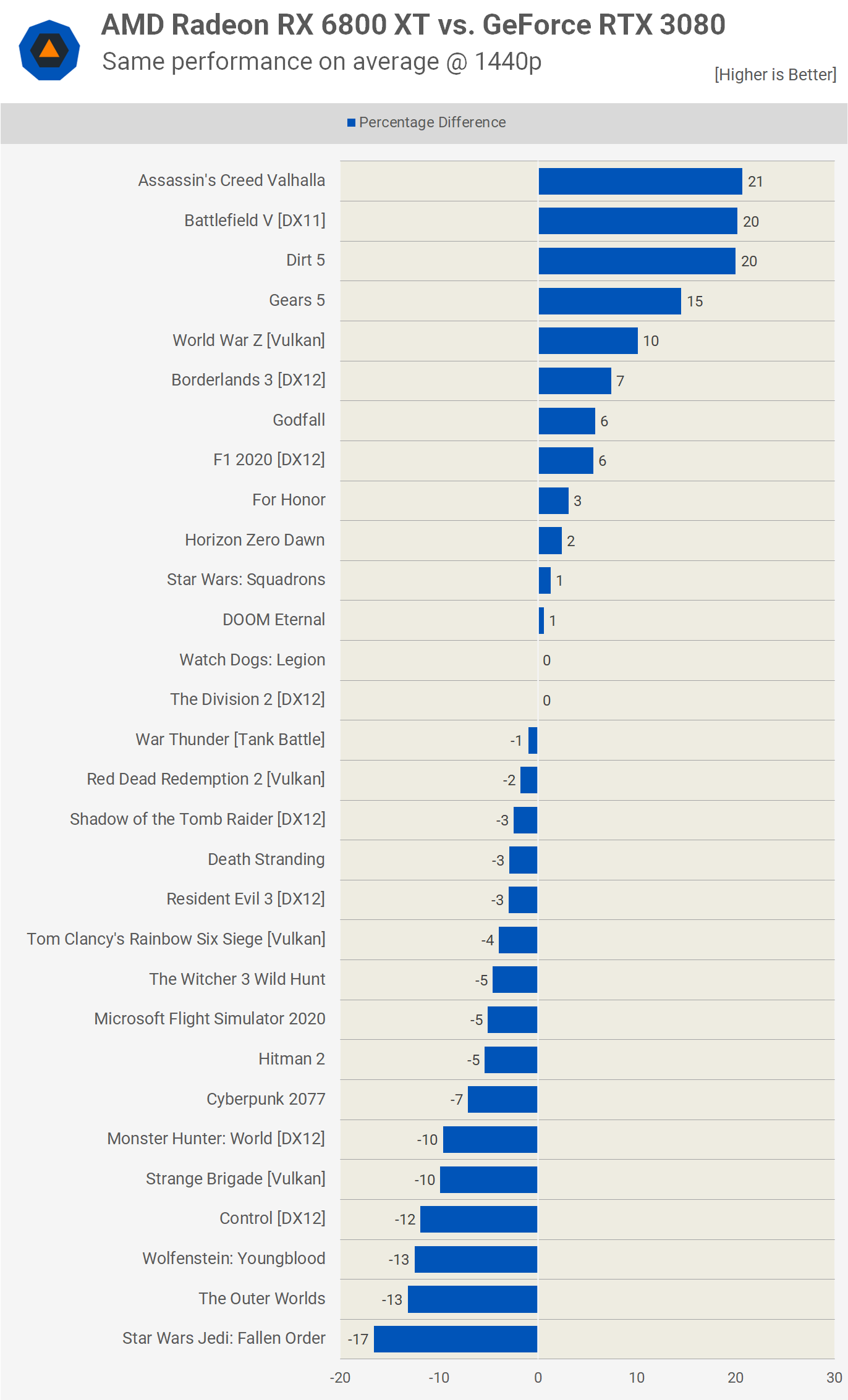

Last up are Doom Eternal results and while the margins here are probably meaningless given we're talking about well over 150 fps even at 4K, let's quickly go over the results anyway. The 6800 XT was barely any faster at 1080p with 412 fps on average. It’s only at 4K where the 3080 takes charge to win by an 11% margin.

30 Game Summary

So far the Radeon RX 6800 XT and GeForce RTX 3080 have looked competitive at 1440p, while the GeForce appears more dominant at 4K. Let’s take a look at the full list of games we tested to see how the GPUs compare overall.

Starting with the 1440p data, we see that these GPUs are indeed even at 1440p. At most the 6800 XT was up to 20% faster, but we also saw instances where it was 13-17% slower. For half the games tested the margin was 5% or less, which we deem a draw. There were just 11 games out of 30 where the margin was 10% or greater in either direction.
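The thresholds used in the summary (5% or less is a draw, 10% or greater is a meaningful gap) can be sketched as a small classifier. The function name and the "modest" label for the in-between band are assumptions made for illustration. The sample values are the 1440p margins quoted in the game-by-game text above, entered as stated and not re-based to a common baseline, so the sign only indicates which card led.

```python
from collections import Counter


def classify_margin(margin_pct: float,
                    draw_threshold: float = 5.0,
                    significant_threshold: float = 10.0) -> str:
    """Bucket a percentage margin by size, ignoring which card is ahead."""
    size = abs(margin_pct)
    if size <= draw_threshold:
        return "draw"          # 5% or less: treated as a tie
    if size >= significant_threshold:
        return "significant"   # 10% or greater in either direction
    return "modest"            # hypothetical label for the band in between


if __name__ == "__main__":
    # Positive: the 6800 XT led; negative: the RTX 3080 led (1440p figures
    # as quoted in the text, used here only as sample input).
    margins_1440p = {
        "Battlefield V": 20.0,
        "Hitman 2": -6.0,
        "Borderlands 3": 7.0,
        "Cyberpunk 2077": -8.0,
        "Control": -13.0,
        "The Outer Worlds": -15.0,
        "Assassin's Creed Valhalla": 21.0,
        "Monster Hunter World": -11.0,
    }
    tally = Counter(classify_margin(m) for m in margins_1440p.values())
    for label, count in tally.items():
        print(f"{label}: {count} game(s)")
```

This covers only the handful of games discussed individually, so its tally will not match the 30-game totals reported in the summary.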

Things become more clear cut at 4K. Here the 6800 XT was on average 6% slower and we're looking at just a few titles where the Radeon GPU came out on top, most of which are AMD sponsored titles. This time there are 14 games where the 6800 XT was slower by a 10% margin or greater, making the RTX 3080 the superior choice for 4K gaming.

This is also in line with the findings in our day-one reviews of both GPUs (tested with the R9 3950X). Even though the list of games was not the same (nor the number of titles), on average the conclusions we could draw from those results were the same.

What We Learned

All that testing later, we have a solid idea of how the Radeon RX 6800 XT and GeForce RTX 3080 compare across a huge number of games. Now the question is which one you should buy, once availability improves.

It’s been two months since the Radeon RX 6800 series launch and AMD assured us that in 4 to 8 weeks it would be possible to purchase AIB cards at the suggested retail price: $580 for the RX 6800, and $650 for the RX 6800 XT. Obviously that hasn't happened, and availability has become a bigger issue than pricing, even though cards are grossly overpriced and out of stock at nearly all times. Therefore it's time to call it: AMD has failed to deliver. We've heard there's virtually no supply of 6800 series GPUs coming in and the situation is worse than Nvidia's.

On the Nvidia side, at least there are RTX 3080 AIB models listed at the MSRP, even if they are out of stock. Whereas the 6800 XTs appear to be $200+ over the MSRP, there are a number of 3080s priced within $100 of the MSRP. So supply and pricing on Nvidia's end appear to be better, though it is still a dire situation.

Frankly, the 6800 XT isn't worth $850 and you absolutely shouldn't pay that much for one. We'd rather spend $150 on something like a second-hand RX 570, play some older games or esports titles with lower quality visuals, and wait it out.

That's the situation as of writing, but what about a future where both the RTX 3080 and 6800 XT are available at the MSRP? Which is the better value then?

If you're into streaming and wish to use your GPU for all the heavy lifting, then the RTX 3080 is the obvious choice, too. AMD offers only poor encoding support, which is something they should have addressed by this point, especially if they want to charge top dollar for their GPUs.

The Radeon has the upper hand on VRAM buffer, though the RTX 3080 does very well at 4K right now with 10 GB of memory and we don’t see this being an issue any time soon. In terms of cost per frame, both are similar.

Features like ray tracing support and DLSS can make the RTX 3080 more appealing to you, especially if the games you play use those features. In fact, if you care about ray tracing, the RTX 3080 is a much better option. Although we didn’t spend much time showing how the two GPUs compare in this regard, we have dedicated articles about this, and we’re particularly enthusiastic about DLSS 2.0 support coming to more games.

As we've noted in the past, the list of games that support ray tracing is slowly growing, but there are few games where the feature truly shines. Games such as Control, Watch Dogs Legion, Cyberpunk 2077 and Minecraft are the best showcases, whereas Fortnite-style games, Call of Duty and Battlefield V are not as well suited to it given their fast-paced gameplay and need for top FPS.

You will almost always want to enable DLSS 2.0 in any game that supports it, though. This is something you'd likely enable in Fortnite, for example. How useful DLSS is depends on both the game and the resolution. Typically we see reviewers evaluating DLSS in games like Cyberpunk 2077 using an RTX 3080 at 4K, and this is by far the best way to showcase the technology.

As you lower the resolution, even to 1440p, the image becomes slightly blurry as DLSS has less data to work with. At 1080p, even in Cyberpunk 2077, the quality DLSS option isn't great and in our opinion is noticeably worse than native 1080p. With that said, we consider DLSS a strong selling point of RTX Ampere GPUs and it's something AMD will need to counter sooner rather than later.

As for Smart Access Memory, which right now is only enabled by AMD on Zen 3 setups, we've dedicated an article to it as well. The consensus appears to be that until the technology is universally supported and enabled by default, we shouldn't test with it. Although SAM can be enabled for all games, only some see a noteworthy performance uplift, while others see little change or even a slight decline. With SAM enabled, the 6800 XT's average performance improves by only 3%.

TL;DR: The RTX 3080 and RX 6800 XT are evenly matched, but overall the GeForce RTX 3080 has the upper hand in this battle. New technologies such as ray tracing and DLSS make it harder than ever to make a concise GPU recommendation, but hopefully our thorough testing can help you narrow down your choice between the two.

How to Make Chrome Always Open Your Previously Open Tabs

Posted: 02 Feb 2021 10:13 AM PST

We've all been there: you're in the middle of a complex task in Google Chrome, but you need to restart or log out. Luckily, with one quick settings change, Chrome can remember all of your tabs and reload them automatically the next time you start your browser. Here's how to set it up.

First, open Chrome. In any window, click the three vertical dots button in the upper-right corner and select "Settings" from the menu.

In the "Settings" tab, select "On startup" in the sidebar.

In the "On startup" section, select the radio button beside "Continue where you left off."

After that, close the "Settings" tab. The next time you restart Chrome, all of your tabs will open again exactly where you left off.

And if you ever want to experiment, you can also make Chrome launch with a set of favorite pages every time in the same "On startup" page in Chrome Settings. Just select "Open a specific page or set of pages" instead. Very handy!

Of course, if you want Chrome to always open with a fresh, empty browser state, you can head back to this screen and select "Open the New Tab page" instead.

What Is an “Uncertified” Android Device?

Posted: 02 Feb 2021 08:57 AM PST

A great thing about Android is its open nature. Any company can take Android's open-source code and put it on a device. This doesn't come without problems, though. Devices can be "uncertified" and lose access to some features. What does that mean?

Android devices can look wildly different depending on the customizations that the manufacturer makes. However, as different as they may look, Google wants to ensure some level of consistency across devices, both in functionality and security.

RELATED: What Are Android Skins?

Google has a list of requirements called the Compatibility Definition Document (CDD). These requirements must be met in order for a device to pass the Compatibility Test Suite (CTS) and be certified.

What Happens With an Uncertified Device?

Uncertified Android devices are very rare. The most common situation that leads to an uncertified device is rooting or custom ROMs. If you happen to get your hands on an uncertified device, there are a few things you should know about it.

In 2018, Google began warning users during the setup process that their device is not certified by Google. The user is still able to set up the phone and use it, but they can't access the Google Play Store.

First and foremost, Google can't ensure that the device is secure. These devices may not receive routine updates, which are critical for security. Without Play Protect, there's no certification that the Google apps on the device are real Google apps. These apps and features may also not work correctly.

If your device somehow still managed to have Google apps pre-installed, Google can shut them down. For example, as of March 2021, the Google Messages app doesn't work on uncertified devices.

How to Check if Your Android Phone Is Uncertified

As previously mentioned, the vast majority of Android users don't have to worry about their device being "uncertified." In fact, if your device came with the Google Play Store, it's almost certainly certified. Here's how you can check.

Open the Google Play Store on your Android phone or tablet. Tap the hamburger menu icon to open the sidebar menu.

Select "Settings" from the list.

Scroll down to the "About" section. Under "Play Protect Certification," it will say whether your device is certified or uncertified.

That's it! If you find that your device is uncertified and you haven't modified it, you can check Google's list of supported Android devices to see if yours is included.
